Web Scrape Reddit to Find New Threats

Reddit Investigation (3)

This post walks through the process of looking for new and emerging threats by web scraping Reddit to find individual posts that could be indicators.

Here we are searching for references to scams related to cryptocurrency.

This is how I initially discovered a “paper wallet” scam: several users had independently posted about similar experiences of being scammed.

The process can be distilled down to three sections:

First - choose your search terms and, optionally, specific subreddits.

Second - get Reddit API credentials.

Third - paste a Python script (with your search terms and API credentials inserted) into Google Colab and hit “go”.

First Section

The process is to first decide what to look for and where. To do this I asked ChatGPT what to search for, and it gave the following answer:

Search for Relevant Posts

Use Reddit's search function to find posts with keywords like "scammed," "crypto scam," or "lost money." Target relevant subreddits, such as:

r/CryptoCurrency

r/Scams

r/Bitcoin

r/Ethereum

r/CryptoMarkets
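If you want to try these searches by hand before writing any scraper, here is a minimal sketch that builds one Reddit search URL per subreddit and search term. The helper name `build_search_url` is my own, and the `restrict_sr` and `sort` query parameters are assumed from the URLs that Reddit's own search page produces, so check them against a live search.

```python
from urllib.parse import urlencode

# Subreddits and search terms taken from the suggestions above
SUBREDDITS = ["CryptoCurrency", "Scams", "Bitcoin", "Ethereum", "CryptoMarkets"]
SEARCH_TERMS = ["scammed", "crypto scam", "lost money"]


def build_search_url(subreddit, term):
    """Return a search URL limited to one subreddit, newest posts first.

    Hypothetical helper: the query parameters are an assumption based on
    what Reddit's search page shows in the address bar.
    """
    query = urlencode({"q": term, "restrict_sr": 1, "sort": "new"})
    return f"https://www.reddit.com/r/{subreddit}/search/?{query}"


# Print one URL per subreddit/term pair so they can be opened in a browser
for subreddit in SUBREDDITS:
    for term in SEARCH_TERMS:
        print(build_search_url(subreddit, term))
```

Opening a few of these in a browser is a quick way to judge whether a term is too broad before it goes into the script.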

Filter for Scams

Refine results based on the post’s title or body content:

Look for terms like "rug pull," "fake exchange," or "phishing."

Second Section

You need API credentials for Reddit. If you’ve already got them, skip to “Third Section”.

Let’s get started on getting the credentials.

Here’s a detailed guide to creating a Reddit application to get your three API credentials, which are identified as client_id, client_secret, and user_agent.

(Before proceeding, sign up for a Reddit account and sign in.)

Step 1: Go to Reddit App Preferences

Log in to Reddit:

Go to Reddit and log in with your Reddit account. (https://www.reddit.com/)

Navigate to App Preferences:

Go to Reddit App Preferences. (https://www.reddit.com/prefs/apps)

Step 2: Create a New Application

Scroll to the Bottom:

At the bottom of the page, you’ll see a section labeled "Developed Applications".

Click on "Create App":

Click the Create App or Create Another App button.

Fill Out the Form:

Name: Enter a name for your application (e.g., KeywordScraper).

App Type: Select script (for personal use).

Redirect URI: Enter http://localhost (needed for the API, even if you don’t use it in your script).

Description (Optional): You can add a short description of your app.

About URL (Optional): Leave it blank.

Save the Application:

Click the "Create App" button at the bottom of the form.

Step 3: Get Your API Credentials

Client ID:

After creating the app, you’ll see a "client ID" at the top of the application (a string of characters below the app name).

Client Secret:

The "client secret" is displayed next to the app details.

User Agent:

The user_agent is not provided explicitly. Create a custom string that identifies your app and user, such as:

KeywordScraper/1.0 by YOUR_USERNAME

Replace YOUR_USERNAME with your Reddit username.

So the final output appears like:

    client_id="YOUR_CLIENT_ID",  # Replace with the client ID you copied
    client_secret="YOUR_CLIENT_SECRET",  # Replace with the client secret you copied
    user_agent="KeywordScraper/1.0 by YOUR_USERNAME",  # Replace YOUR_USERNAME with your Reddit username
    username="YOUR_USERNAME",  # Replace with your Reddit username
    password="YOUR_PASSWORD"  # Replace with your Reddit password

Here is a more visual approach to finding your creds:

You should be at preferences (reddit.com) (https://www.reddit.com/prefs/apps/)

You will see something that looks like the following:

The client_id is the string under “personal use script”.

The client_secret is next to where it says “secret”.

user_agent, as previously noted, is

"KeywordScraper/1.0 by YOUR_USERNAME" # Replace YOUR_USERNAME with your Reddit username

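A common slip at this point is leaving one of the placeholders in place. As a rough sketch, the check below lists any credential that is empty or still contains a `YOUR_` placeholder. The helper name `unfilled_credentials` and the `creds` dictionary are my own illustration and are not part of the Reddit API or asyncpraw.

```python
def unfilled_credentials(creds):
    """Return the names of credentials that are empty or still hold a YOUR_ placeholder."""
    return [name for name, value in creds.items() if not value or "YOUR_" in value]


# Example values copied from the template above; replace them with your own
creds = {
    "client_id": "YOUR_CLIENT_ID",
    "client_secret": "YOUR_CLIENT_SECRET",
    "user_agent": "KeywordScraper/1.0 by YOUR_USERNAME",
    "username": "YOUR_USERNAME",
    "password": "YOUR_PASSWORD",
}

problems = unfilled_credentials(creds)
if problems:
    print("Still need to fill in:", ", ".join(problems))
else:
    print("All credentials are filled in.")
```

This only checks that something was filled in. It cannot tell whether the values are actually valid; that only shows up when the script connects to Reddit.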
Third Section

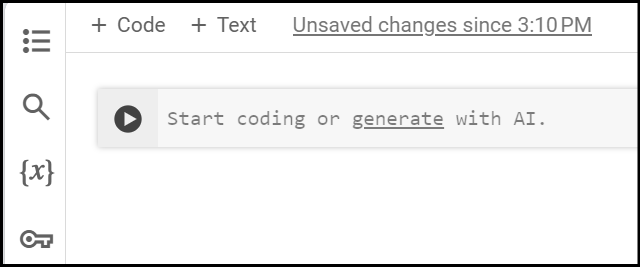

Go to Google Colab (https://colab.research.google.com/) - activate your account

Click "+ New Notebook" in the lower left corner of the pop-up window.

You'll see this:

Input "pip install asyncpraw" and then click the arrow on the left side of the cell.

Then after it runs, click "+ Code" in the top left corner and it will create a new section below to input more code.

Here you will paste the following code, but with your creds filled in. You can also change the subreddits and keywords. These are all in the first part of the script, at the top.

    import asyncio
    import asyncpraw

    async def main():
        # Configure Reddit API credentials
        reddit = asyncpraw.Reddit(
            client_id="",
            client_secret="",
            user_agent="",
            username="",  # Replace with your Reddit username
            password=""  # Replace with your Reddit password
        )

        # Subreddits to search
        subreddits = ["Bitcoin", "cryptocurrency", "cryptoscams", "CryptoMoonShots", "Scams", "CryptoCurrencyTrading"]

        # Keywords to search for
        keywords = ["scam", "scammed", "phishing", "rug pull"]

        # Iterate through the subreddits
        for subreddit_name in subreddits:
            print(f"\nSearching subreddit: r/{subreddit_name}")
            subreddit = await reddit.subreddit(subreddit_name)

            # Fetch the newest posts
            async for submission in subreddit.new(limit=10):  # Adjust the limit as needed
                # Check for keywords in title or text
                if any(keyword.lower() in submission.title.lower() or keyword.lower() in submission.selftext.lower() for keyword in keywords):
                    print(f"\nPost found in r/{subreddit_name}:")
                    print(f"Title: {submission.title}")
                    print(f"URL: https://www.reddit.com{submission.permalink}")
                    if submission.is_self:
                        print(f"Content: {submission.selftext[:500]}...")  # Display the first 500 characters of the content
                    print("-" * 80)

    # Instead of using asyncio.run(), use the following to get the current event loop
    # and run the main coroutine until it completes within the existing loop.
    await main()

The results should look like this:

A follow-up post will explain how the code works.
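The heart of the script is the keyword check on each post's title and body. Here is a minimal standalone sketch of that check that runs without any Reddit credentials. The helper name `matched_keywords` is my own and is not part of asyncpraw, and unlike the script it returns which keywords matched instead of a plain yes or no.

```python
def matched_keywords(title, body, keywords):
    """Return the keywords that appear in the title or body, ignoring case."""
    # Join title and body with a newline so a keyword cannot match across the boundary
    text = f"{title}\n{body}".lower()
    return [keyword for keyword in keywords if keyword.lower() in text]


keywords = ["scam", "scammed", "phishing", "rug pull"]

# Made-up example post, used only to exercise the check
hits = matched_keywords(
    "Lost my coins to a Rug Pull",
    "It looked like a normal token launch.",
    keywords,
)
print(hits)
```

Note that this is plain substring matching, the same as in the script, so "scam" also matches inside longer words such as "scammed". Expect some false positives that need a manual read.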

That’s it!